前言

在写爬虫爬取github数据的时候,国内的ip不是非常稳定,在测试的时候容易down掉,因此需要设置代理。本片就如何在Python爬虫中设置代理展开介绍。

也可以爬取外网

爬虫编写

需求

做一个通用爬虫,根据github的搜索关键词进行全部内容爬取。

代码

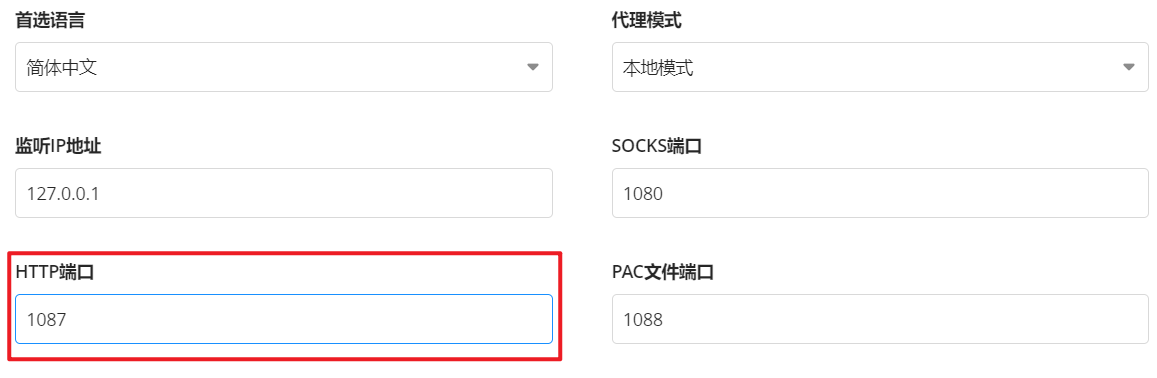

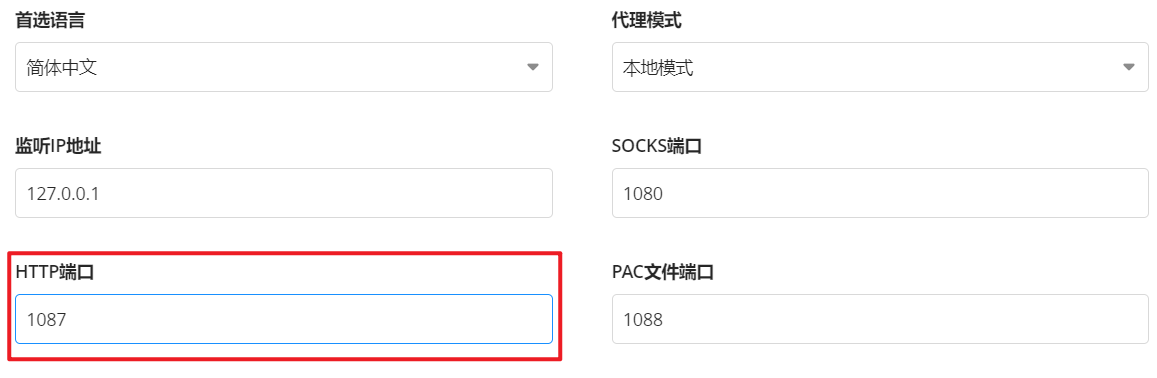

首先开启代理,在设置中修改HTTP端口。

在爬虫中根据设置的系统代理修改proxies的端口号:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

| import requests

from lxml import html

import time

etree = html.etree

def githubSpider(keyword, pageNumberInit):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.71 Safari/537.36 Edg/97.0.1072.62',

}

keyword = keyword

pageNum = pageNumberInit

url = 'https://github.com/search?p=%d&q={}'.format(keyword)

proxies = {'http': 'http://127.0.0.1:1087', 'https': 'http://127.0.0.1:1087'}

status_code = 200

while True and pageNum:

new_url = format(url % pageNum)

response = requests.get(url=new_url, proxies=proxies, headers=headers)

status_code = response.status_code

if status_code == 404:

print("===================================================")

print("结束")

return

if (status_code == 429):

print("正在重新获取第" + str(pageNum) + "页内容....")

if (status_code == 200):

print("===================================================")

print("第" + str(pageNum) + "页:" + new_url)

print("状态码:" + str(status_code))

print("===================================================")

page_text = response.text

tree = etree.HTML(page_text)

li_list = tree.xpath('//*[@id="js-pjax-container"]/div/div[3]/div/ul/li')

for li in li_list:

name = li.xpath('.//a[@class="v-align-middle"]/@href')[0].split('/', 1)[1]

link = 'https://github.com' + li.xpath('.//a[@class="v-align-middle"]/@href')[0]

try:

stars = li.xpath('.//a[@class="Link--muted"]/text()')[1].replace('\n', '').replace(' ', '')

except IndexError:

print("名称:" + name + "\t链接:" + link + "\tstars:" + str(0))

else:

print("名称:" + name + "\t链接:" + link + "\tstars:" + stars)

pageNum = pageNum + 1

if __name__ == '__main__':

githubSpider("hexo",1)

|

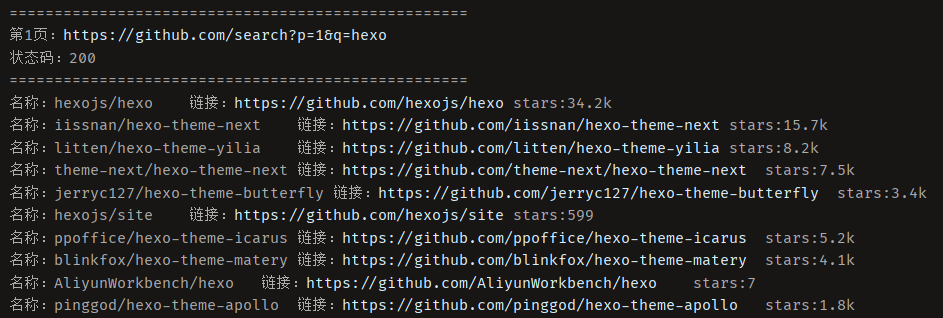

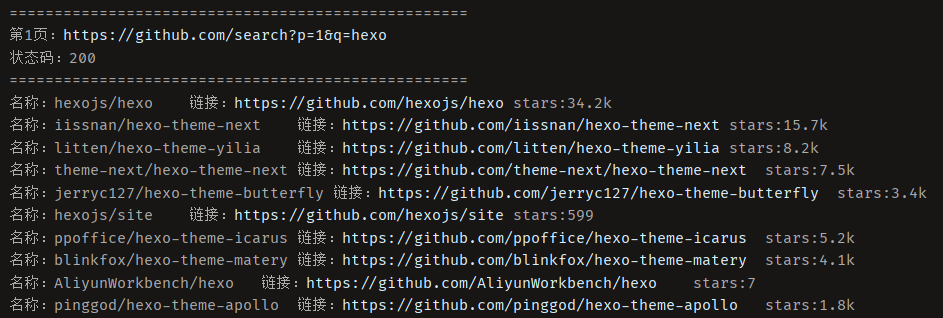

爬取结果如下,包含搜索结果的名称、链接以及stars:

后记

爬取外网的简单测试,状态码:

1

2

3

4

5

| import requests

proxies={'http': 'http://127.0.0.1:1087', 'https': 'http://127.0.0.1:1087'}

response = requests.get('https://www.google.com/',proxies=proxies)

print(response.status_code)

|